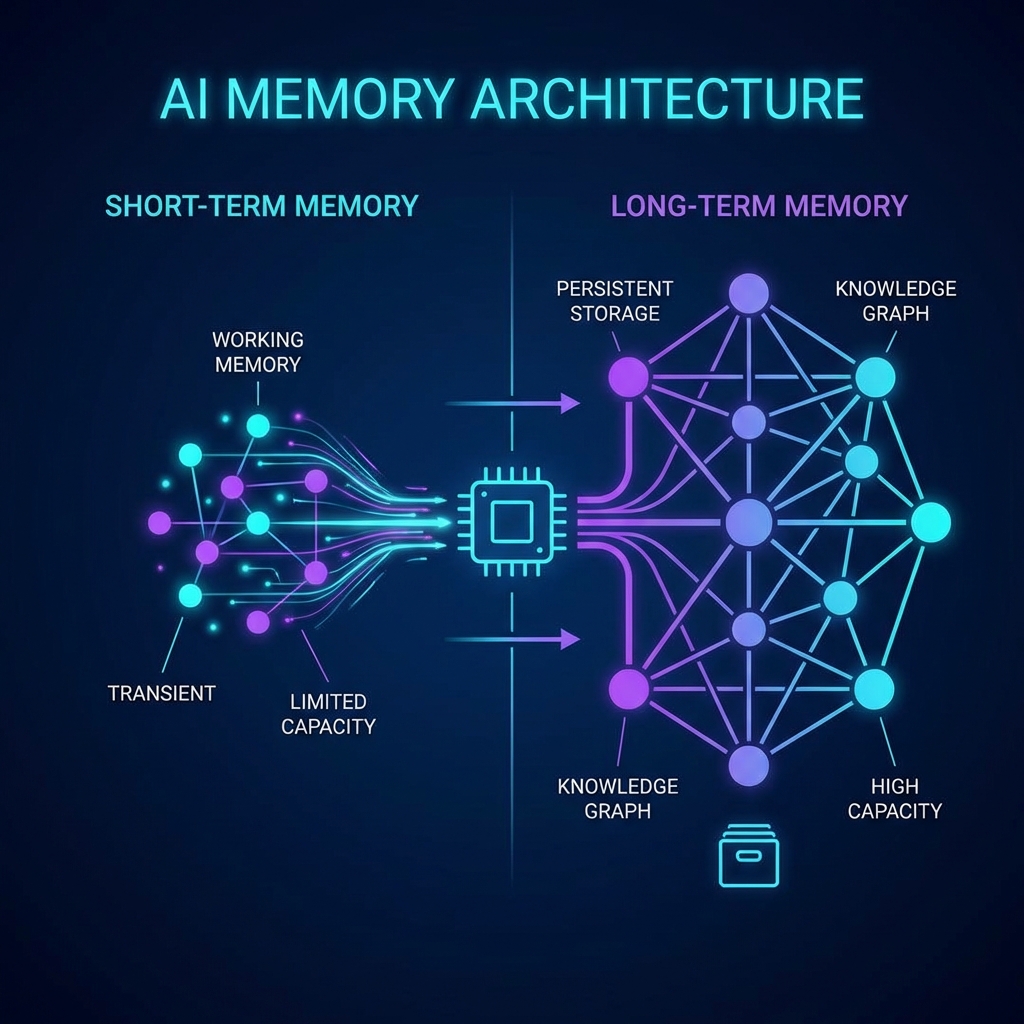

Before developing any AI agent, it is fundamental to define the context retention strategy. Memory is not just data storage; it is the backbone of the agent's "personality" and utility. We must ask ourselves the following about our application:

- Short-term Memory? Is it necessary to ensure continuity and fluidity of the immediate conversation?

- Long-term Memory? Does the agent need to remember facts from past sessions?

- Granularity? Should it store precise details or just the general concept?

- Retrieval? How will this information be stored, searched, and used?

Practical Example: Pam - Friendly AI Teacher

Figure 1: Pam, an AI agent designed to help users practice languages via WhatsApp.

The goal of Pam is to act as a friend conversing with you, a friend who has extensive language knowledge and suggests corrections whenever there is an opportunity.

In a conversation like this, an exact database with details of every subject is not necessary. Since the goal is to make the user speak and practice, I needed a history with only relevant information about the user, provided by them during the conversation: name, profession, hobbies, recent activities, music taste, etc.

answering the questions for Pam:

- Yes (Short-term): Configured so at least the last 10 messages remain intact to maintain flow.

- Yes (Long-term): Necessary for personalization.

- No (Details): It can be a memory that vaguely remembers information brought up by the user (summaries).

- Strategy: Summarized memory works, always passing the accumulated summary and the last few conversations to the LLM.

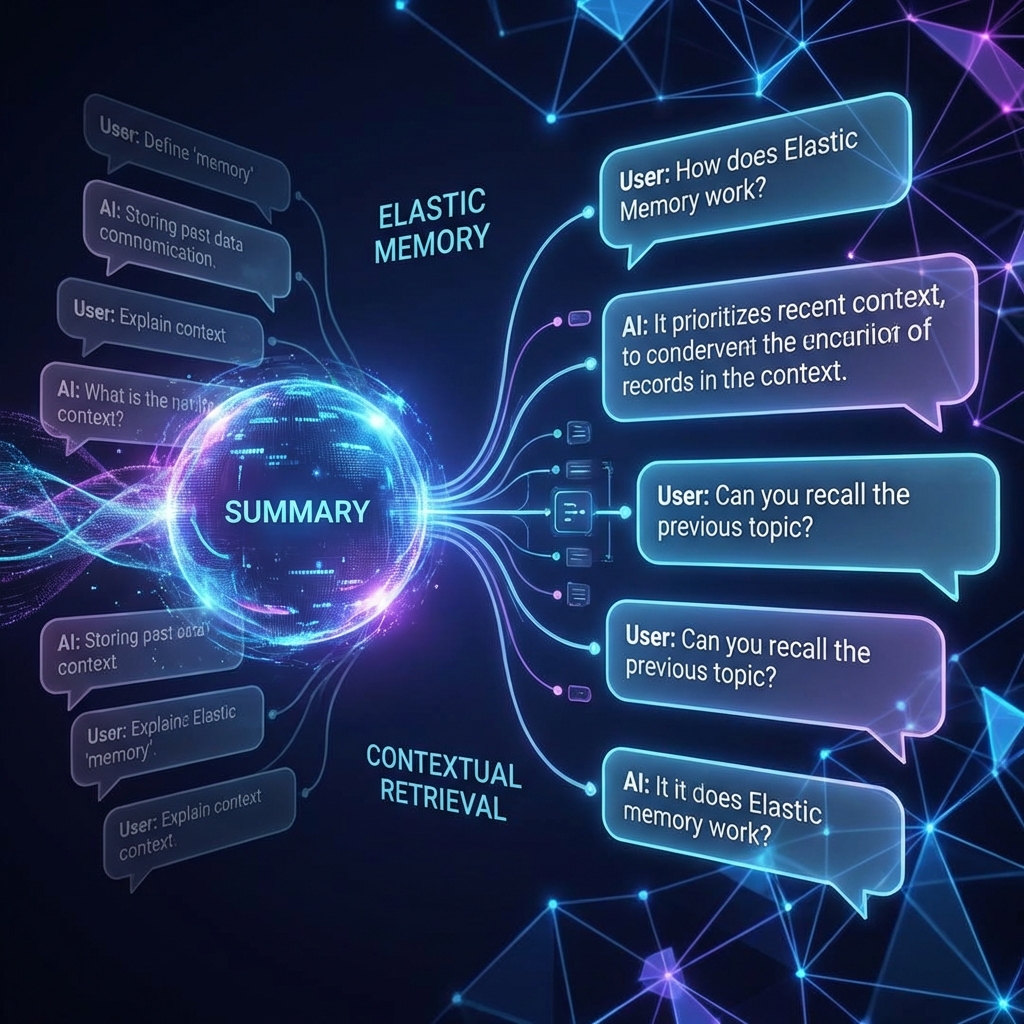

The Solution: Elastic Memory

In this case, I developed an "Elastic Memory" architecture. It functions as an intelligent buffer that adapts as the conversation evolves, managed directly by LangGraph.

The dynamic works as follows:

- The conversation starts with only the system prompts.

- As the user converses, new messages fill the context window.

- When a token threshold is reached, the conversation is processed.

- The system generates a summary of everything except the last X messages (to ensure the AI doesn't "forget" what was just said).

- A new memory is instantiated containing:

[Previous Summary + New Recent Messages].

Benefits of this approach

- Conversation fluidity maintained

- Low token usage (Cost Reduction)

- Low response latency

- Simplified management

- Low storage cost

- Simple encapsulation in LangGraph